Microstudies: Part 4

ChatGPT and You — How to Reclaim Lost Power in a Toxic Relationship

We all know what I think about ChatGPT, but it seems students cannot get enough of the stuff: the 2024 Stack Overflow survey was overflowing with students who reported using AI tools like ChatGPT and GitHub Copilot. Of the people who said they were learning to code, a whopping 63% said they used AI tools, with an additional 13% saying they were planning to use them in the future. And, of these people, 71% said they thought AI tools "speed up learning".

Yeowzah! I'm not so sure about that last part. While LLM AI tools might make people feel they are being more productive, there is little evidence that this productivity is real. Even if there is some slight boost to productivity, it potentially comes at the cost of reduced code quality over time, and possibly even subtle bugs that would be hard for human code readers to detect. And, given the infancy of commonplace LLM AI tools, there are no studies looking at the long-term impact of these tools on learning, particularly beyond the introductory programming stage, and certainly nothing exploring the impact of insane suggestions like teaching "prompts-first" programming.

So, is there anything to all this hype? Are the participants in the 2024 Stack Overflow survey correct? Do LLM AI tools like ChatGPT help with learning? To begin to pick apart these questions, I gave students a single-question questionnaire asking about their ChatGPT usage and then correlated their answers with their test scores.

I've heard two main anecdotal hypotheses regarding LLM AI tools like ChatGPT and students. One, that weaker students seem more likely to use tools like ChatGPT. And two, that students who use tools like ChatGPT are disadvantaged, in that they "learn less" than students who do not use ChatGPT. In this microstudy, I begin to test these hypotheses.

Specifically, the hypotheses being tested are:

H1: Students who report using AI tools such as ChatGPT will attain lower test scores than students who report not using AI tools such as ChatGPT.

H2: Students who report using AI tools such as ChatGPT will attain progressively worse test scores compared to students who report not using AI tools such as ChatGPT as the semester progresses.

Note: "AI tools such as ChatGPT" will henceforth be referred to simply as "ChatGPT", as it is assumed this is primarily what students would be using if they report using such tools. This assumption is supported by the Stack Overflow survey, in which 85.6% of people using AI while learning to code said they used ChatGPT.

Method

The data for this study were gathered from a CS1 course that teaches the basics of imperative programming in Python and is designed for students with no prior programming experience. The course has four computer-based tests spread throughout the semester, run asynchronously during students' normal lab times. Data were collected over two semesters in 2024, with the first semester acting as a pilot for the second. In both semesters, the questionnaire data were gathered during Test 4.

The Pilot

The pilot study was conducted during the summer semester (Jan – Feb) which is an intense 6-week semester (compared to the usual 15-week semester at the institution). In total, there were 84 students enrolled in the course.

Students fill in a paper attendance sheet at the start of each test that they must hand in before leaving the room. Beneath the name, ID, and seat number fields, I asked the teaching team to add the following question:

The Main Study

The main study was conducted during semester 1 (March – June) which is run over 15 weeks. In total, there were 676 students enrolled in the course.

The question given to students was not modified between the pilot and main study.

Results

I am interested in the effect of ChatGPT usage on test scores across all four tests. To analyse this, I took each student's average grade across the four tests, ignoring any missing test grades.
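To make the averaging rule concrete, here is a minimal sketch of a per-student mean that skips missing tests. The student IDs, the scores, and the use of `None` to mark a missed test are all assumptions for illustration; they are not the actual data or the actual analysis script.

```python
from statistics import mean


def average_ignoring_missing(scores):
    """Mean of the test scores that are present; None marks a missed test."""
    present = [s for s in scores if s is not None]
    # A student with no recorded tests has no average at all.
    return mean(present) if present else None


# Hypothetical students: four test scores each (percent), None = test not sat.
students = {
    "s1": [80.0, 90.0, 70.0, 100.0],
    "s2": [60.0, None, 80.0, None],
    "s3": [None, None, None, None],
}

averages = {sid: average_ignoring_missing(t) for sid, t in students.items()}
```

Under this rule a student who missed a test is averaged over the tests they did sit, rather than being given a zero for the missing one.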

The Pilot

For the pilot study run during the summer semester, 62 of the 84 enrolled students sat Test 4, and of those, 45 students (73%) responded to the questionnaire. Only the data from students who responded to the questionnaire are used in the rest of the analysis.

The mean and median test scores were 66.9% and 72% respectively.

Test Scores

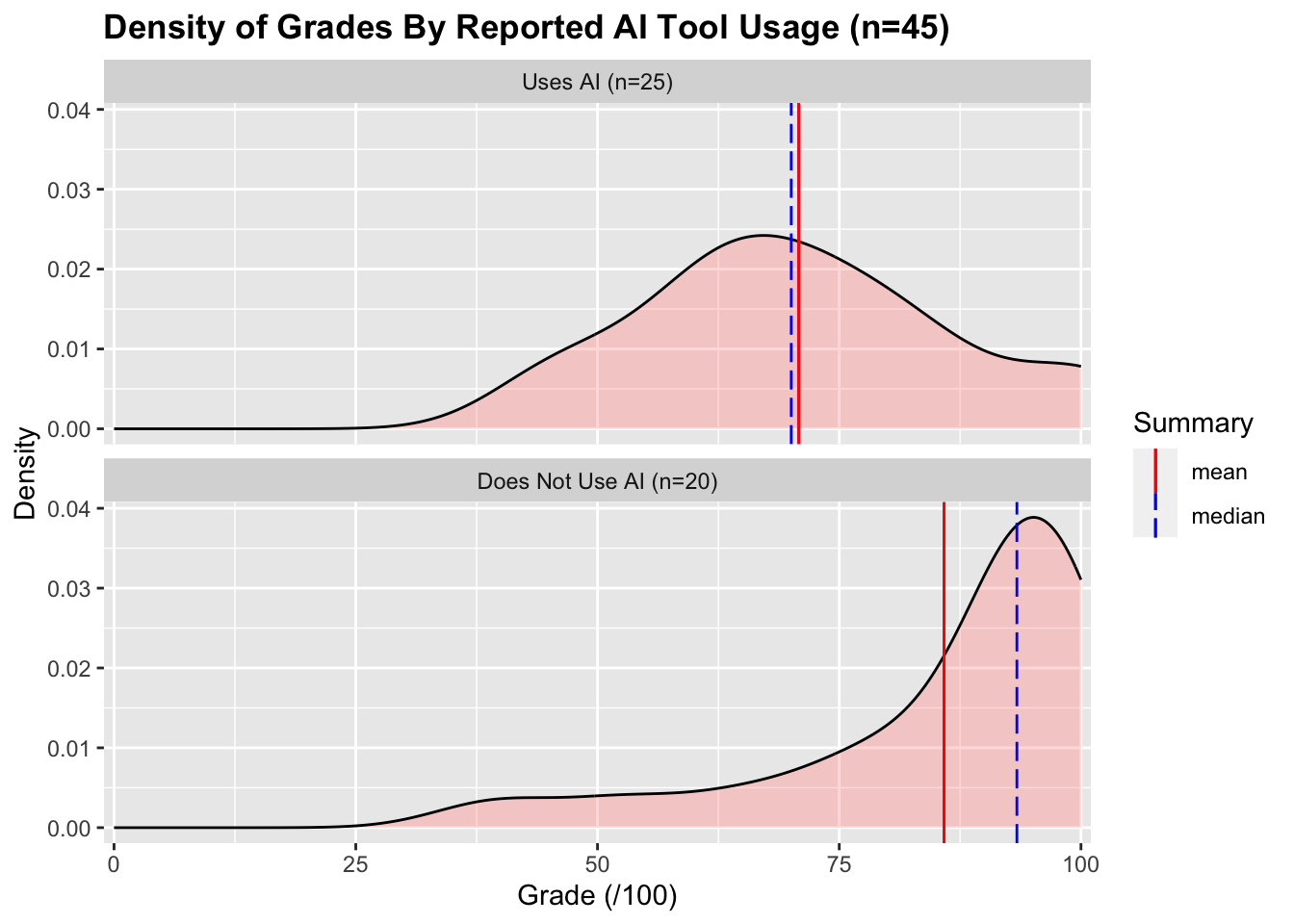

Of the 45 students who responded to the questionnaire, 25 (56%) reported using ChatGPT and 20 (44%) reported not using ChatGPT. The grade distributions for these two groups are below:

The mean and median test scores for students who reported using ChatGPT were 70.8% and 70.0% respectively. The mean and median test scores for students who reported not using ChatGPT were 85.8% and 93.3% respectively. This difference is significant [1] with a large effect size [2].

Change in Test Scores

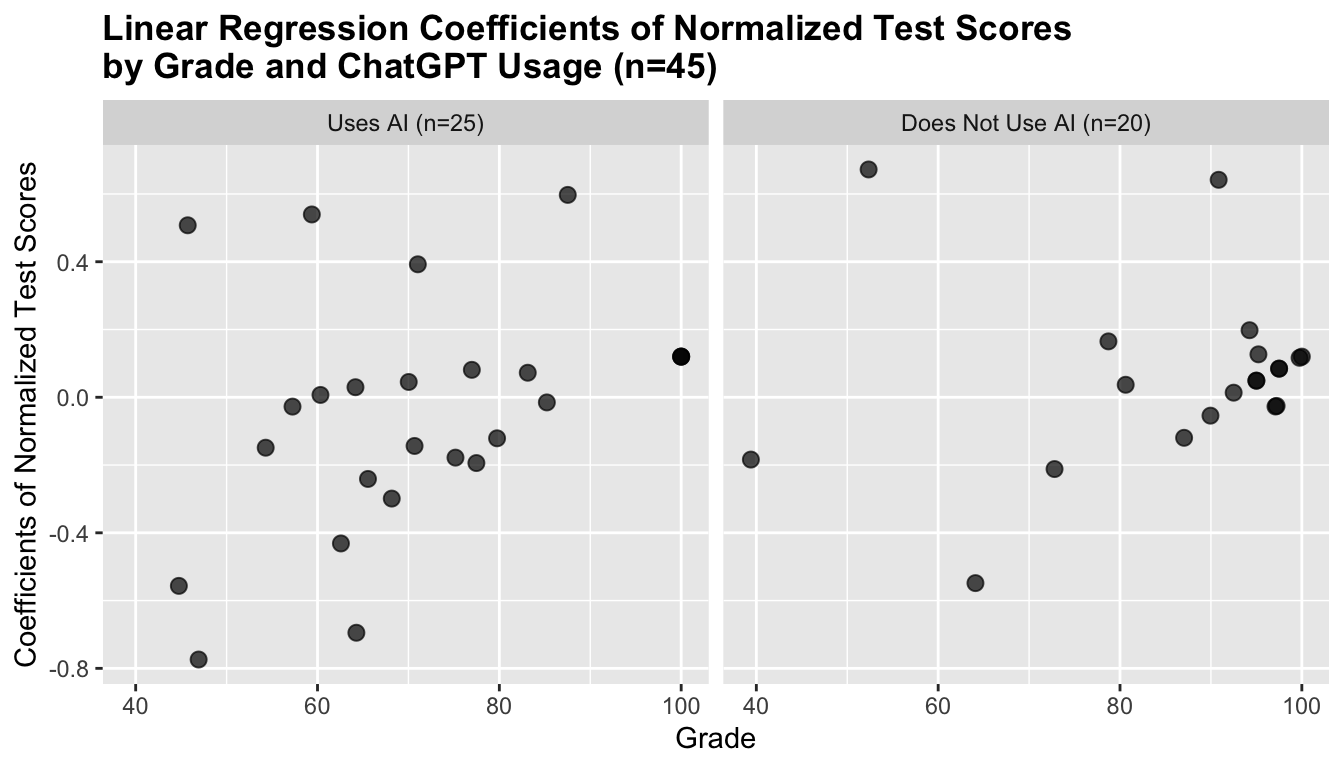

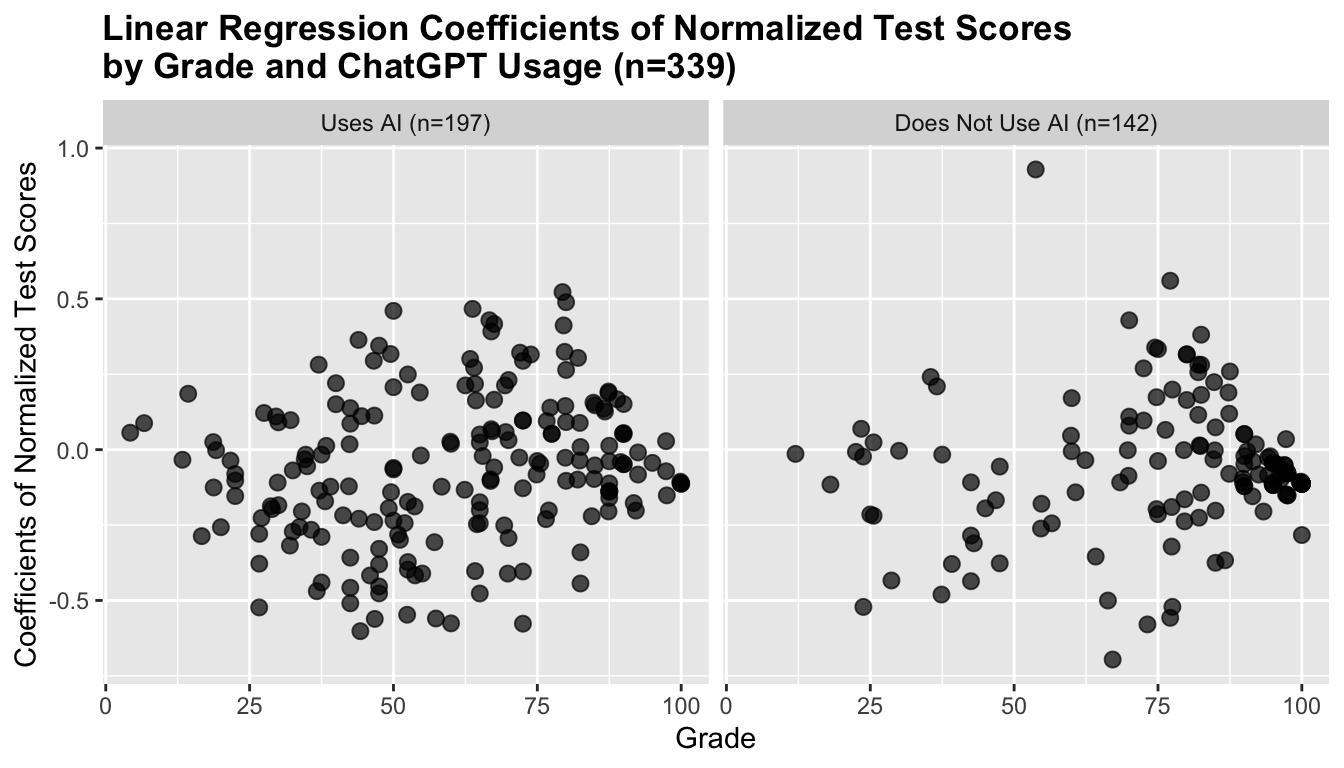

To test for a change in test scores over time, the normalized test scores for each student were fit to a linear regression model. That is, the scores for each test were scaled to have a mean of zero and unit variance, and a line of best fit was then created for each student's four tests. The slope of this line roughly gauges whether a student's test scores improved or got worse, relative to the rest of the class, as the semester progressed. The coefficients (slopes) of these lines against grades are reported below:
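The normalise-then-fit procedure can be sketched as follows. This is a minimal illustration, not the script used for the study: the raw scores are made up, the population standard deviation is my assumption for "unit variance", and the x-axis is assumed to be the test number 1 to 4.

```python
from statistics import mean, pstdev


def standardise_column(column):
    """Scale one test's scores to mean 0 and unit variance, skipping None."""
    present = [x for x in column if x is not None]
    mu, sigma = mean(present), pstdev(present)
    if sigma == 0:  # every student got the same score on this test
        return [None if x is None else 0.0 for x in column]
    return [None if x is None else (x - mu) / sigma for x in column]


def slope(ys):
    """Least-squares slope of ys against test number 1..len(ys), skipping None."""
    pts = [(i + 1, y) for i, y in enumerate(ys) if y is not None]
    if len(pts) < 2:
        return None  # a line needs at least two tests
    x_bar = mean(x for x, _ in pts)
    y_bar = mean(y for _, y in pts)
    sxx = sum((x - x_bar) ** 2 for x, _ in pts)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in pts)
    return sxy / sxx


# Hypothetical raw scores: one row per student, one column per test.
raw = [
    [50.0, 55.0, 65.0, 80.0],  # climbs relative to the class
    [70.0, 70.0, 70.0, 70.0],  # always at the class mean
    [90.0, 85.0, 75.0, 60.0],  # falls relative to the class
]

# Standardise per test (column), then fit one line per student (row).
columns = [standardise_column(list(col)) for col in zip(*raw)]
normalised = [list(row) for row in zip(*columns)]
slopes = [slope(row) for row in normalised]
```

Because each test is standardised first, a positive slope means a student moved up relative to their classmates, not merely that later tests were easier.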

Given the small sample sizes in the pilot, a robust ANCOVA test to compare the ChatGPT/Non-ChatGPT groups was not performed.

The Main Study

For the main study run during semester 1, 579 of the 676 enrolled students sat Test 4, and of those, 339 students (59%) responded to the questionnaire.

The mean and median test scores were 77.5% and 78.7% respectively.

Test Scores

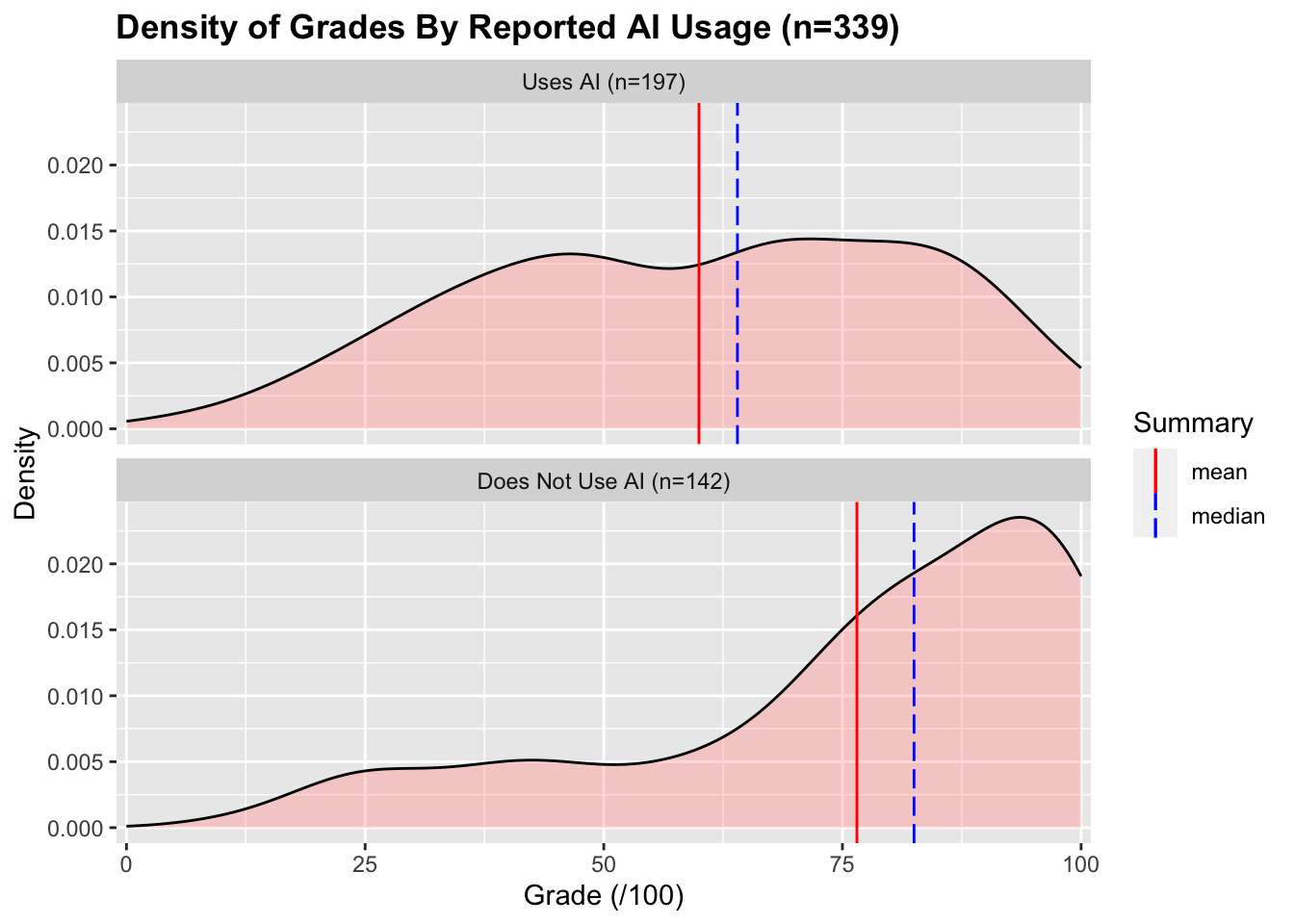

Of the 339 students who responded to the questionnaire, 197 (58%) reported using ChatGPT and 142 (42%) reported not using ChatGPT. The grade distributions for these two groups are below:

The mean and median test scores for students who reported using ChatGPT were 60.0% and 64.0% respectively. The mean and median test scores for students who reported not using ChatGPT were 76.5% and 82.5% respectively. This difference is significant [3] with a medium effect size [4].
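The Mann-Whitney U statistic and Cliff's delta reported in the footnotes are two views of the same pairwise comparison: delta = 2U / (n1 × n2) − 1. The sketch below shows that identity on toy data and checks it against the footnoted values. The toy scores are hypothetical, and I am assuming U is reported for the ChatGPT group.

```python
def mann_whitney_u(a, b):
    """U statistic for sample a: each pair with a > b counts 1, a tie counts 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


def cliffs_delta(a, b):
    """Cliff's delta: P(a > b) - P(a < b) over all cross-group pairs."""
    greater = sum(x > y for x in a for y in b)
    less = sum(x < y for x in a for y in b)
    return (greater - less) / (len(a) * len(b))


def delta_from_u(u, n1, n2):
    """Cliff's delta recovered from U via delta = 2U / (n1 * n2) - 1."""
    return 2.0 * u / (n1 * n2) - 1.0


# Hypothetical scores, only to check the identity on toy data.
uses = [55.0, 60.0, 70.0, 70.0]
does_not = [70.0, 85.0, 90.0]
u_toy = mann_whitney_u(uses, does_not)

# Reported values: pilot (U=128.5, n=25 and 20), main study (U=8012, n=197 and 142).
pilot_delta = round(delta_from_u(128.5, 25, 20), 3)
main_delta = round(delta_from_u(8012, 197, 142), 3)
```

Plugging in the reported U values and group sizes reproduces the reported deltas of -0.486 (pilot) and -0.427 (main study), so the footnoted statistics are at least internally consistent.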

Change in Test Scores

As with the pilot, the coefficients of the linear regressions of students' normalized test scores were computed. These coefficients are plotted against grades below:

A robust ANCOVA test with Holm correction shows no significant difference between the coefficients of the ChatGPT and Non-ChatGPT groups when adjusted for overall grade [5].
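For readers unfamiliar with the Holm correction, here is a minimal sketch of the step-down adjustment applied to a set of p-values. The raw p-values below are hypothetical (the unadjusted values from the ANCOVA are not reported here), and this is the textbook procedure rather than the exact routine used by whatever statistics package produced the results.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    # Visit p-values from smallest to largest, remembering original positions.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Adjusted values must never decrease as the raw p-values increase.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p-values for five comparisons.
raw_p = [0.01, 0.04, 0.03, 0.5, 0.6]
adjusted_p = holm_adjust(raw_p)
```

The cap at 1 is why an adjusted table can contain several p-values of exactly 1.000: any moderately large raw p-value gets multiplied past 1 and clipped.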

Discussion

There is a marked difference in the test scores attained by students who report using ChatGPT compared to students who report not using ChatGPT: students who use ChatGPT attain significantly lower test scores than those who don't. From this, I will accept the first hypothesis (H1).

Interestingly, when looking at how students progress through the semester, there is no significant difference between the ChatGPT and Non-ChatGPT groups. From this, it seems the use of ChatGPT does not influence student learning and I can reject the second hypothesis (H2).

Another interesting note is that the proportion of students who report using ChatGPT in this study (58%) is similar to the proportion of students who reported using AI in the Stack Overflow survey (63%).

Threats to Validity

There are two significant threats to the validity of this particular microstudy.

First, the deadline for "late withdrawals" (the ability to drop out of a course without it affecting students' GPA calculations) fell between Tests 3 and 4 in both semesters, and the data for this study were collected in Test 4. Typically, a significant number of the lowest-performing students drop out of the course just before the deadline as they are aware, by that point in the course, that they are going to fail. This means a specific group (low-performing students) is underrepresented in the data collected for this study. As this group is of specific interest to this microstudy, this has likely influenced the results in some way.

Second, students were very wary of answering a question about ChatGPT usage. The university at which this study was conducted has, in the past, given harsher punishments for students caught cheating using tools like ChatGPT than students caught, for example, contract cheating. While the questionnaire given to students was designed in such a way that students who answered positively were not admitting to cheating, and the course coordinator gave her assurance to students before the test that their answer to the question would not in any way influence their grades, students still appeared sceptical and unconvinced. This has two implications for this study:

- The response rate for this questionnaire was low (compared to the other microstudies), and it is assumed students who did use ChatGPT were less likely to answer than students who didn't.

- It is likely that several of the students who did answer the questionnaire falsely reported not using ChatGPT.

Despite this, of the students who answered the questionnaire, over half of them still reported using ChatGPT.

Conclusions

So, is the hype worth it? Currently, no. There is no evidence AI boosts students' grades, and it is not the top performers who are using it. At the very least, it doesn't seem to be harming student grades over time, but this study only looked at students over a single semester in an introductory programming course. It would be interesting to see if this trend (or lack thereof) continues in higher-stage courses where tools like ChatGPT may struggle to understand and provide useful completions for more complex content.

Footnotes

1. The groups are not both normal. Uses AI (W=0.96, p=0.397), Does not use AI (W=0.78, p<0.001). A 1-tailed Mann-Whitney test was performed, yielding U=128.5, p=0.003.

2. A Cliff's delta effect size test gave a statistic of -0.486 with a 95% confidence interval of -0.741 to 0.107.

3. The groups are not normal. Uses AI (W=0.97, p<1e-3), Does not use AI (W=0.86, p<1e-9). A 1-tailed Mann-Whitney test was performed, yielding U=8012, p<1e-11.

4. A Cliff's delta effect size test gave a statistic of -0.427 with a 95% confidence interval of -0.534 to -0.307.

5. A robust ANCOVA with a trim of 0.1, comparing the regression coefficients between the ChatGPT/Non-ChatGPT groups and correcting for overall test score, gives:

   | grade | n1 | n2 | diff | se | CI | statistic | p |
   |---|---|---|---|---|---|---|---|
   | 18.675 | 57 | 13 | -0.05 | 0.07 | -0.26 to 0.17 | 0.62 | 1.000 |
   | 42.5 | 118 | 26 | 0.04 | 0.05 | -0.11 to 0.18 | 0.64 | 1.000 |
   | 64.175 | 150 | 53 | -0.04 | 0.05 | -0.16 to 0.09 | 0.74 | 1.000 |
   | 79.875 | 114 | 92 | 0.02 | 0.03 | -0.06 to 0.10 | 0.64 | 1.000 |
   | 100.0 | 63 | 78 | 0.06 | 0.03 | -0.01 to 0.13 | 2.22 | 0.145 |

   p-values have been Holm-adjusted for multiple comparisons within grade groups.