I Have a Mouth and I am Screaming

How generative AI made me take to the loom

In mid-2021 and late 2022 respectively, GitHub Copilot, an AI tool that could generate code, and OpenAI's ChatGPT, a general-purpose AI chatbot, were released. These tools became immensely popular, and the technology behind them is being used for even more AI assistant tools.

There seems to be a lot of drama around these newfangled AIs. There is the zesty and exciting drama, such as a Google engineer being fired for claiming one of the company's chatbots is sentient and lawyers being caught using ChatGPT in legal cases. However, there is also some less exciting drama, such as robot dogs with guns strapped to their backs and the use of AI in disinformation campaigns. There are also the current and real dangers AI automation already poses; however, these somewhat more realistic dangers seem to be ever more drowned out in all the hype and noise about recent advancements in AI autocompletion technologies (i.e. ChatGPT and friends).

My introduction to these tools, The ChatGPTs, was when I got beta access to Codex, one of OpenAI's GPT models trained specifically on code. My initial hope was to use these tools to generate code explanations on the fly for students in interactive online course material; however, I was disappointed at the wild hit-or-miss nature of the tool and felt it wouldn't be appropriate. Since then, I've been interested in how schools and universities were going to respond to the almost overnight introduction of AI chatbots that seem able to answer most of the novice-level questions typical of secondary and early tertiary study. The responses seem tense and varied, with some schools outright banning the technology and some embracing it.

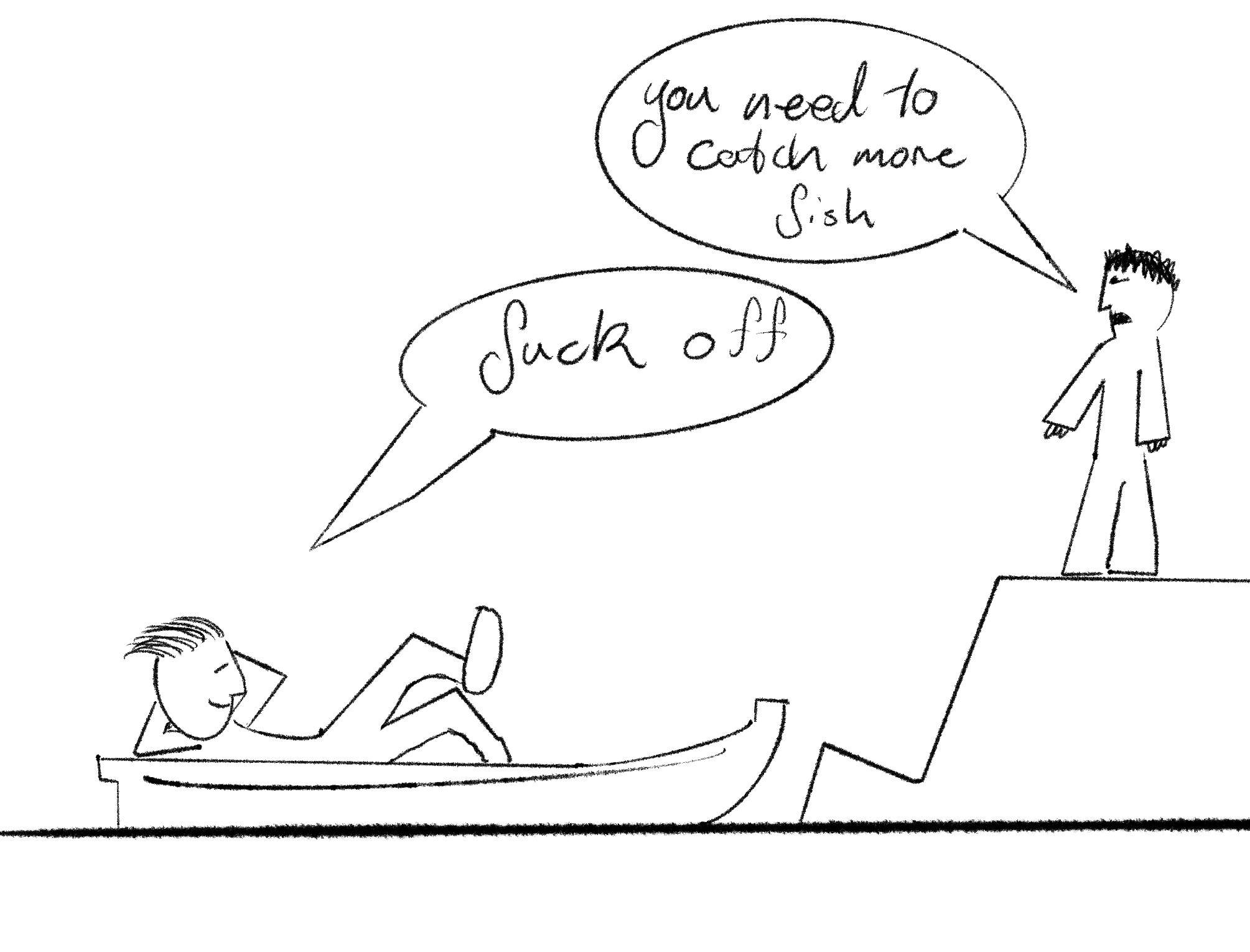

Part of me feels that overall there is absolutely no way educational institutions will gracefully handle the rapid introduction of everyday AI (although perhaps these technologies will self-implode before they need to do anything about it [1, 2]). There seems to be this awkward tension between some educators tentatively wanting to encourage students to use and explore these tools while maintaining zero tolerance when it comes to assignments and exams. And attempts to enforce such policies seem to be laden with issues [3].

There are, at least, glimmers of hope in the experience reports of educators who demonstrate to their students that The Chatbot doesn't know all, and maybe we shouldn't blindly trust it. However, this wisdom seems to be lost on many of the students I tutor, with the phrase "But ChatGPT said-" being thrown my way just a few too many times.

It's Crunch Time

At the very outset of this whole LLM malarkey, I managed to slip in with a few papers looking at how Codex (one of the GPTs focused on programming) performed on first-year programming exercises [4, 5]. Around this time, and perhaps because I had gotten myself involved with it, there seemed to be endless talk about how AI made developers more productive, allowing them to squeeze out code at speeds never seen before.

I also noticed a rather sharp shift in the research interests of many education researchers. It seems almost everyone has pounced on these emerging tools, winding up endless projects exploring which mundane teaching processes can be automated away with some large language model, from writing bespoke worked examples to generating entire suites of exam questions. Some researchers even seemed to ditch their prior research interests in favour of becoming full-time generative AI proselytizers and researchers. It is hard not to feel something in me has died when I think about it.

I started to wonder what all this code and automation was actually for. Why do I need to blast out code 55% faster than I already write it? Why do I need some f***ing chatbot to come up with a worked example for me? Who benefits from all of this? Am I being purportedly more productive for what? My own pleasure? Can't we all just be happy with what we have and sing a song holding hands in a big circle or something?!

Who Are We Serving?

After vaguely being involved in a few more studies to do with The GPTs, I felt myself becoming ever more sympathetic to the attitudes of the neo-luddites. I certainly had reservations about the robustness of these tools given my initial experiences with them, but I was starting to feel a little uncomfortable with just how readily people were willing to adopt these tools so early on, particularly in educational settings.

Beyond disliking how these new AI chatbots were being used in education and programming, I had a feeling things had generally gone too far. It still shocks me how readily people are willing to be told that they vaguely need to be more productive and that some new technological augment is the answer to all their woes.

People talk of an "AI Revolution" following on from the Agricultural and Industrial revolutions. However, if we can call what is happening now a "revolution", it is not going to look like the domestication of crops or workers going into the factories. We're probably not going to see some radical improvement in material wealth and well-being. If there is an AI Revolution, it is probably going to look like bored corporate workers using integrated chatbots slightly more as they work for ever more abstracted bureaucracies.

Since the beginning I, somewhat pessimistically, have felt that in the long run, these generative AI models won't be much of a big deal — certainly they are not going to manifest some kind of technological utopia as people seem to claim. I have been left with a vague yet uncomfortable feeling that these tools are just another way to suck the life and joy out of work in the name of boundless growth and productivity.

I Started Weaving

The introduction of these new AI chatbots made me start to reflect on work and education in general. It feels like everything is turning into a mad dash to do things more and faster — but it's not clear what we are doing everything more and faster for. There's already a view among youths and others that the world is dying and that growth mindsets are unsustainable. I'm not really sure this new AI business is really going to help with any of that. It feels like some new toy to keep us busy.

I wanted to step away from it all and slow down, to purposely reject the rapid and undirected growth and production that seems to be expected these days. I don't want to be told that I could be more productive if I use some random chatbot or code-completing plugin. And I certainly don't think that attitude is necessary in the education space, where it seems like I'm being constantly blasted from all angles with papers proposing some zany new way large language models could be used in the classroom.

Perhaps the neo-luddites have a point; fuck it, maybe even the luddites had a point. Maybe all of this has just gone too far. Perhaps somewhere between cottage industries and the establishment of Shein, there is a happy middle ground in which we can work and produce within our means (and, at this point, I'm leaning a bit more towards cottage industry).

I was already vaguely familiar with movements such as the slow movement and its many offshoots, such as slow software. But I was in crisis mode and looking for an escape. Somehow, through all of this, I decided the answer to all my problems would be to buy a loom and to start weaving. I have no idea why. Who knows. Either way, I bought a loom, and it has been good.

In a way, it feels like an intentional assault on a fast-paced world that demands productivity. It has been oddly rewarding to slow down and methodically work through projects. Not to mention the cool new tea towels I've made. I know it sounds dramatic, but I did genuinely hate the readiness with which the world accepted tools like ChatGPT and GitHub Copilot in the name of unbounded productivity. Slowing down has given me a way to disconnect from all of that and ask which other parts of my life I could slow down as well.

Footnotes

1. Chen, L., Zaharia, M., & Zou, J. (2023). How is ChatGPT's behavior changing over time? arXiv [cs.CL]. http://arxiv.org/abs/2307.09009

2. Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., & Anderson, R. (2023). Model Dementia: Generated Data Makes Models Forget. arXiv preprint arXiv:2305.17493. https://doi.org/10.48550/arXiv.2305.17493

3. Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. Cell Press. https://doi.org/10.1016/j.patter.2023.100779

4. Finnie-Ansley, J., Denny, P., Becker, B. A., Luxton-Reilly, A., & Prather, J. (2022). The Robots Are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming. Proceedings of the 24th Australasian Computing Education Conference, 10–19. Virtual Event, Australia. https://doi.org/10.1145/3511861.3511863

5. Finnie-Ansley, J., Denny, P., Luxton-Reilly, A., Santos, E. A., Prather, J., & Becker, B. A. (2023). My AI Wants to Know If This Will Be on the Exam: Testing OpenAI's Codex on CS2 Programming Exercises. Proceedings of the 25th Australasian Computing Education Conference, 97–104. Melbourne, VIC, Australia. https://doi.org/10.1145/3576123.3576134